August 3, 2023

A Visual Feast

Computer scientists bring fascinating new methods to SIGGRAPH 2023

3D light sculptures. Tsunami waves on a beach. Previewing color tattoos. Contributions from the Bickel and Wojtan groups at the Institute of Science and Technology Austria (ISTA) to the 2023 SIGGRAPH conference tackle an impressive variety of classic and novel questions. While their focuses range from computer graphics to fabrication methods, the computer scientists are united in finding cost-effective, innovative solutions and empowering users.

SIGGRAPH is the world's leading annual conference for computer graphics and interactive techniques, bringing together the latest developments in the field. This year once again saw broad participation from ISTA scientists.

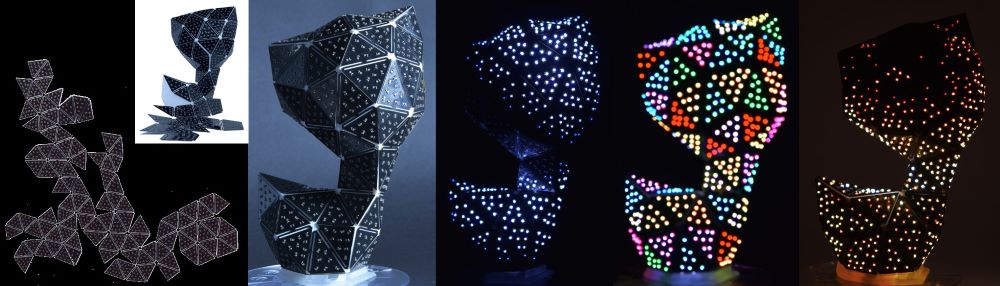

PCBend: a new accessible pipeline for 3D light sculptures

In this day and age, the importance of light as an element in design, art, and architecture is undisputed. However, designing and manufacturing light-covered 3D objects has been both prohibitively expensive and tedious for the average user. This problem caught the attention of Manas Bhargava, a PhD student in the Bickel group at ISTA, who set about developing an easy-to-use and affordable pipeline to create and fabricate such structures. Now, Bhargava and colleagues at ISTA as well as the University of Lorraine, France, have introduced PCBend, a system that achieves exactly that.

Flat (2D) LED circuit boards are inexpensive and easily produced, unlike curved (3D) circuits. To keep costs low and make use of existing manufacturing chains, the team first found a method to “flatten” the target object design. “Unfolding a 3D object made of triangles is a classic problem, with solutions inspired by origami,” explains Bhargava. “But we also had to account for the physical constraints imposed by the circuit connections between two triangles—unlike folded paper, they can break.” Using woodworking techniques, the team created special hinges that would allow the printed circuit board to bend without severing the circuits. The team’s program further solved the circuit layout problem, connecting all the LEDs along a single path.
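To make the unfolding idea concrete, here is a minimal sketch of the classic approach Bhargava alludes to. It is not the PCBend algorithm itself. It assumes the mesh is given as triples of vertex indices, and the function name `hinge_tree` is hypothetical. Triangles that share an edge are linked in a "dual" graph, a spanning tree of that graph picks the edges that stay connected as fold lines (hinges), and every other shared edge is cut. A tree like this always yields one connected flat piece, but it does not check for overlaps or for the circuit constraints described above.

```python
from collections import deque

def hinge_tree(triangles):
    """Split the shared edges of a triangle mesh into hinges and cuts.

    triangles: list of (a, b, c) vertex-index triples (assumed input format).
    Returns (hinges, cuts), each a list of (face_i, face_j) pairs.
    """
    # Map each undirected edge to the faces that contain it.
    edge_faces = {}
    for f, (a, b, c) in enumerate(triangles):
        for u, v in ((a, b), (b, c), (c, a)):
            edge_faces.setdefault(frozenset((u, v)), []).append(f)

    # Dual graph: faces are nodes, shared edges are links.
    neighbors = {f: [] for f in range(len(triangles))}
    shared = []
    for faces in edge_faces.values():
        if len(faces) == 2:
            f, g = faces
            neighbors[f].append(g)
            neighbors[g].append(f)
            shared.append((min(f, g), max(f, g)))

    # Breadth-first spanning tree starting from face 0.
    visited = {0}
    queue = deque([0])
    hinges = []
    while queue:
        f = queue.popleft()
        for g in neighbors[f]:
            if g not in visited:
                visited.add(g)
                hinges.append((min(f, g), max(f, g)))
                queue.append(g)

    # Shared edges that are not in the tree must be cut to flatten the mesh.
    hinge_set = set(hinges)
    cuts = [e for e in shared if e not in hinge_set]
    return hinges, cuts

# A tetrahedron: 4 faces, 6 edges, each shared by exactly two faces.
tetra = [(0, 1, 2), (0, 1, 3), (1, 2, 3), (0, 2, 3)]
hinges, cuts = hinge_tree(tetra)
```

For the tetrahedron, the tree keeps three hinges and cuts the other three edges, which corresponds to the familiar flat net of four triangles.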

Once the 2D layout is set, the board is manufactured; the user then assembles it into the 3D shape and programs the light patterns. “Our pipeline is simple to use, so others can easily try out their own ideas,” continues Bhargava. “I can’t wait to see what they do!” Possible applications include art, theatre, and stage elements for concerts.

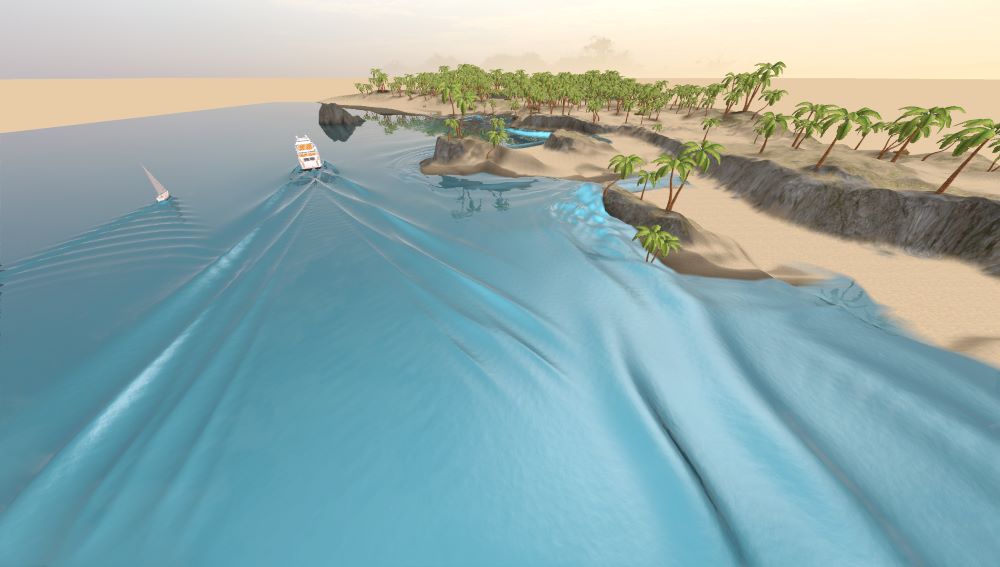

New wave simulation method bridges deep and shallow waters

The next project dives into previously unreachable depths. Equations describing fluid motion have been known since the 1800s. However, though mathematically beautiful, these equations are too computationally expensive to be of use in water wave simulations. In the past, scientists and graphic designers have therefore turned to Airy theory, which describes wave patterns accurately in deep water, or the shallow water equations, which can handle anything near a shore. Each excels in its own regime but fails in the other. Previously, graphics experts had to choose one type of equation and use additional effects to hide any glaring visual errors. Now, Professor Chris Wojtan and long-time collaborator and ISTA alumnus Stefan Jeschke have come up with the first practical method capable of simulating both deep and shallow water effects, as well as interactions between deep and shallow water. Essentially, they combine the two models, taking advantage of the strengths of each while minimizing their weaknesses.

Though “gluing” the models together is in one sense what the collaborators did, deciding which model to use where (i.e. what constitutes deep versus shallow water) required finesse and a profound knowledge of the mathematical equations behind the models. “Depth in the simulations is not just the distance from surface to floor,” Wojtan explains. “The wavelength—the distance from one wave peak to the next—also plays a role.” With their new method, the team can simulate previously unachievable effects such as deep-water tsunami waves flooding a beach or the wake of a boat running up the shoreline—all in real time. “On a practical level, the new model is still well suited for parallel processing, allowing us to achieve real-time frame rates on modern graphics processing units,” adds Jeschke.
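Wojtan's point about wavelength can be illustrated with the standard dispersion relation from linear (Airy) wave theory, in which the phase speed is c = sqrt((g / k) * tanh(k * h)), with wavenumber k = 2π / wavelength and water depth h. The sketch below is textbook material and not the method from the paper. The function names are hypothetical, and the regime thresholds (depth greater than half a wavelength for "deep", less than a twentieth for "shallow") are conventional rules of thumb rather than values taken from the authors.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2

def phase_speed(wavelength, depth):
    """Phase speed (m/s) of a linear water wave from the Airy dispersion relation."""
    k = 2.0 * math.pi / wavelength  # wavenumber in rad/m
    return math.sqrt(G / k * math.tanh(k * depth))

def regime(wavelength, depth):
    """Classify a wave by depth relative to wavelength (rule-of-thumb thresholds)."""
    ratio = depth / wavelength
    if ratio > 0.5:
        return "deep"
    if ratio < 0.05:
        return "shallow"
    return "intermediate"

# A 10 m wave over 100 m of water behaves as a deep-water wave:
# tanh(k * h) is essentially 1, so the speed depends only on wavelength.
wind_wave = (regime(10.0, 100.0), phase_speed(10.0, 100.0))

# A tsunami with a 200 km wavelength over a 4 km deep ocean is "shallow":
# the speed is close to sqrt(g * h) and depends only on depth.
tsunami = (regime(200_000.0, 4_000.0), phase_speed(200_000.0, 4_000.0))
```

The second case shows why physical depth alone is not enough: four kilometers of ocean still counts as shallow water for a wave that is two hundred kilometers long.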

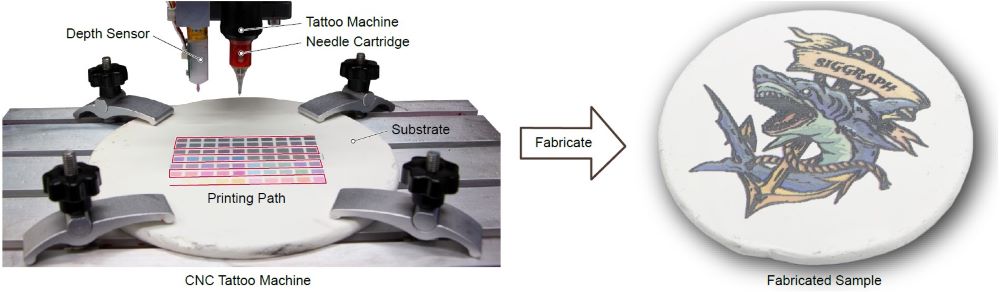

Tattoo previews

Another publication by the Bickel group demonstrates the variety of topics presented at SIGGRAPH. Anticipating how colors will look when tattooed depends on an artist’s experience, but the permanence of tattoos means artists are unable to experiment. Now, the Bickel group and a collaborator have developed the first-ever model that accurately predicts how a tattoo will appear on various substrates. Michal Piovarči, a postdoc in the Bickel group, led the project, combining a deep understanding of color modeling with practical fabrication and programming methods.

To develop the predictive color models, Piovarči took standard equations and adapted them to fit the tattooing environment. His key observation was that the substrate acts as an additional color that mixes with the tattooed inks. Once the basic models were established, the team determined their parameters through physical tests. To do so, Piovarči built a programmable tattooing apparatus and developed a silicone-cornstarch substrate to tattoo on. “For me, the most surprising, and satisfying, part was the range of colors we were able to achieve, once we understood how to optimize the ink combinations for each substrate,” says Piovarči.

The team programmed additional features, such as suggestions for alternative, complementary colors that are more visible than the original design, as well as optimized color selection for tattoo cover-ups. This could further augment artists’ abilities to create tattoos in beautiful colors.

Their technique is not just valuable for its potential applications: “Scientifically, it’s exciting to gain a better understanding of how inks embedded in skin influence the light transport, as well as computationally modeling the appearance and validating the model experimentally,” concludes Professor Bernd Bickel.

Additional work at SIGGRAPH

The Bickel and Wojtan groups will present several other works at SIGGRAPH 2023. The papers, accompanying videos, and other resources can be found on the Visual Computing website, and include projects such as:

- Gloss-Aware Color Correction for 3D Printing

- Stealth Shaper: Reflectivity Optimization as Surface Stylization

- Procedural Metamaterials

The last project, a collaboration between ISTA and MIT, presents a novel, easy-to-use interface for designing metamaterials with unique properties.

Publications:

Marco Freire, Manas Bhargava, Camille Schreck, Pierre-Alexandre Hugron, Bernd Bickel, & Sylvain Lefebvre. 2023. PCBend: Light Up Your 3D Shapes With Foldable Circuit Boards. ACM Transactions on Graphics (SIGGRAPH 2023). DOI: 10.1145/3592411

Stefan Jeschke & Chris Wojtan. 2023. Generalizing Shallow Water Simulations with Dispersive Surface Waves. ACM Transactions on Graphics (SIGGRAPH 2023). DOI: 10.1145/3592098

Michal Piovarči, Alexandre Chapiro, & Bernd Bickel. 2023. Skin-Screen: A Computational Fabrication Framework for Color Tattoos. ACM Transactions on Graphics (SIGGRAPH 2023). DOI: 10.1145/3592432

Funding Information:

PCBend: Light Up Your 3D Shapes With Foldable Circuit Boards: This project was supported by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (Grant Agreement No. 715767 – MATERIALIZABLE).

Generalizing Shallow Water Simulations with Dispersive Surface Waves: This project was funded in part by the European Research Council (ERC Consolidator Grant 101045083 CoDiNA).

Skin-Screen: A Computational Fabrication Framework for Color Tattoos: This work was graciously supported by the FWF Lise Meitner (Grant M 3319).